People are using AI tools they don’t trust. That’s not a paradox — it’s a warning to companies embracing them.

Earlier this month, we published our first-ever survey of perceptions of generative AI and the companies behind it. We found that while more than 40% of people now use at least one AI tool at least several times a week, only one in five trusts their output.

The survey focused on AI companies, but bigger questions emerged: what does this mean for companies adopting AI and weaving it into their existing products and services? Does it matter if companies embrace a technology that most people don’t trust all that much? Are there potential reputational pitfalls? And what should corporate communications, marketing, and HR teams consider as AI becomes part of their products, services, and operations?

Here are four takeaways from our survey:

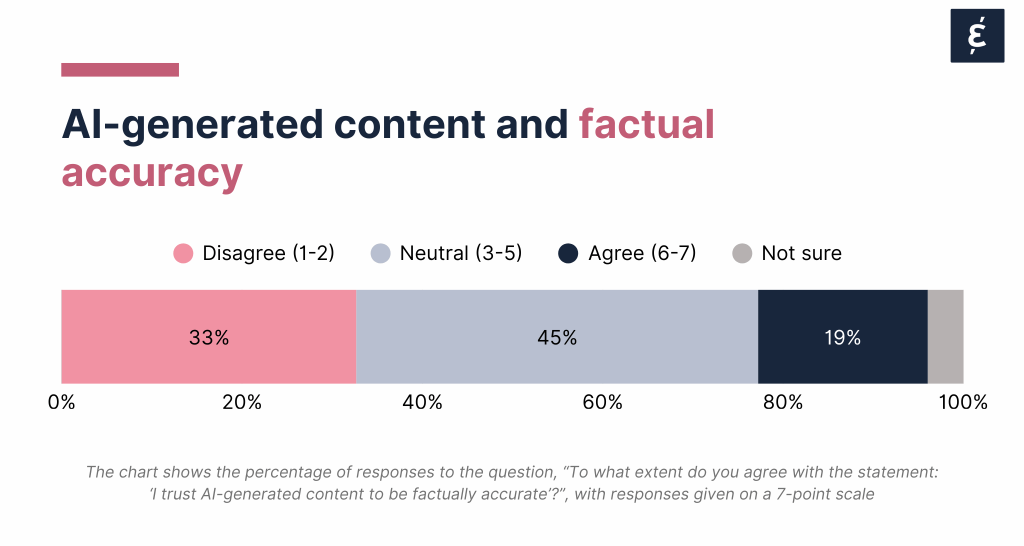

AI is now woven into everyday life — but the public remains unconvinced that it deserves authority. One in three respondents told us they do not trust AI to be factually accurate, while nearly half said they’re neutral.

In reputation terms, neutrality is rarely a safe middle ground; it’s an unclaimed space waiting to tilt positive or negative.

What this means for companies: The window to define your AI story is open now. Those who take ownership of their narrative, by showing where and to what extent human judgment still leads, will fare better than those who assume silence reads as confidence.

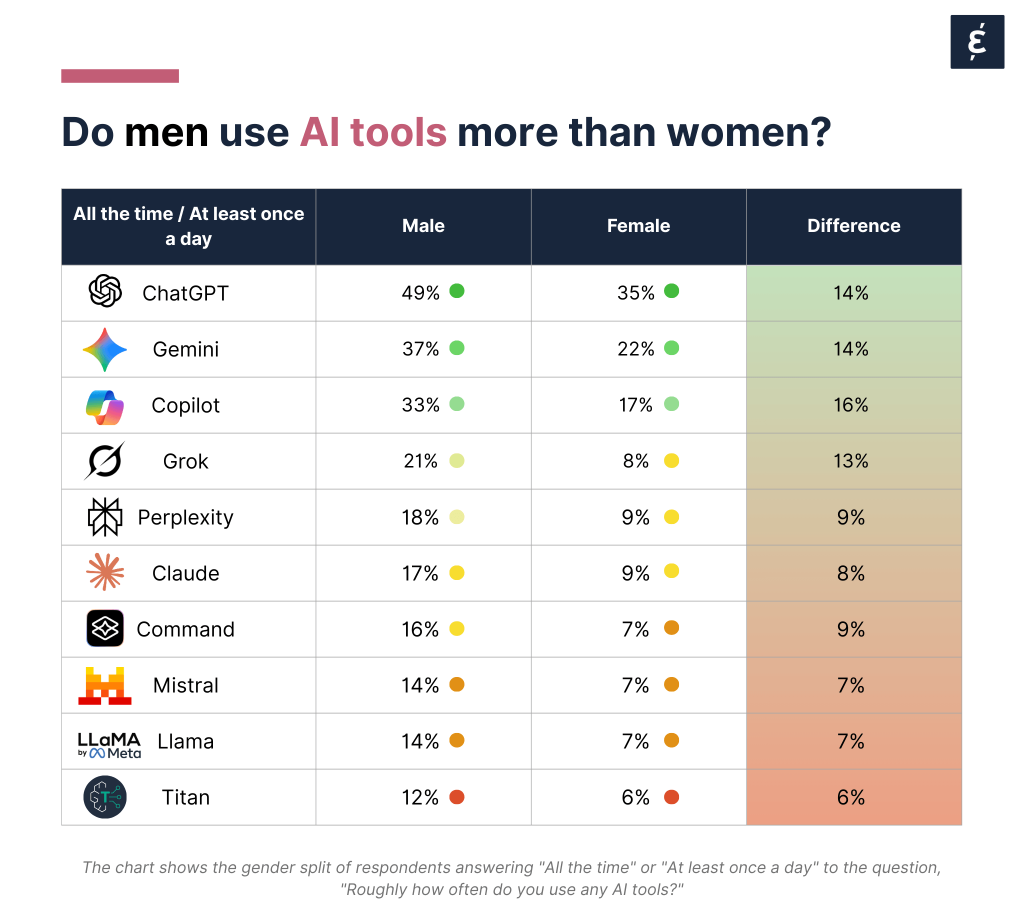

Men are using AI tools at significantly higher rates than women across every major platform, from ChatGPT to Gemini to Copilot. Women are also less likely to believe that AI companies use their technology responsibly and in the public interest.

This imbalance could be as much a reputational issue as a sociological one. If AI’s early adopters skew male, that skew can reinforce perceptions that these tools are built for, and by, a narrow demographic.

For companies integrating AI into their work, being mindful of inclusion — who benefits, who feels excluded, and who defines the rules — will increasingly become a trust issue, not just an HR one.

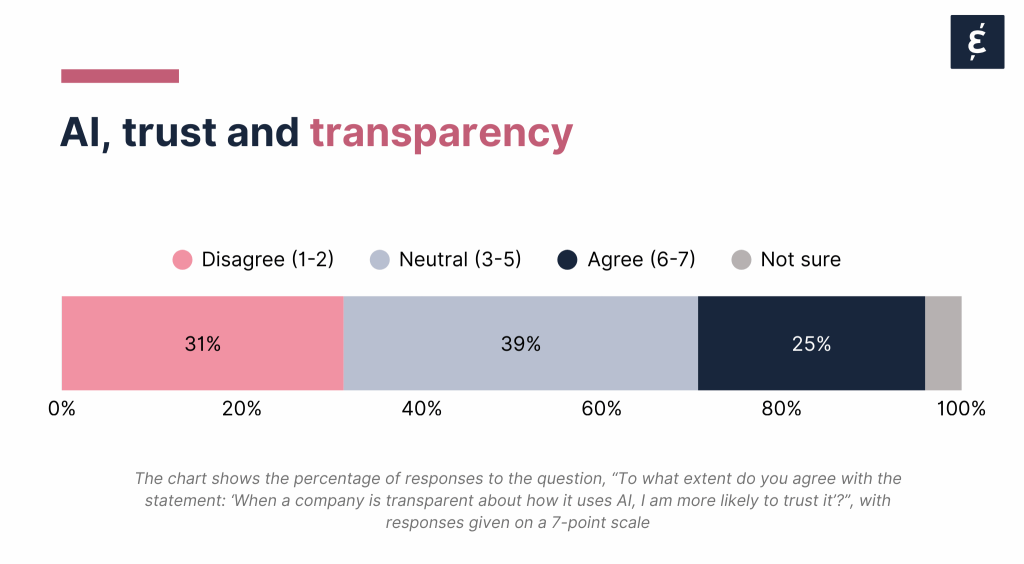

For years, transparency has been one of the corporate world’s most prudent responses to emerging technology risk. But our data suggests that for AI, it’s not yet moving the needle.

Thirty-one percent of respondents say a company being transparent about its AI use doesn’t affect their trust at all, while only 25% say it helps.

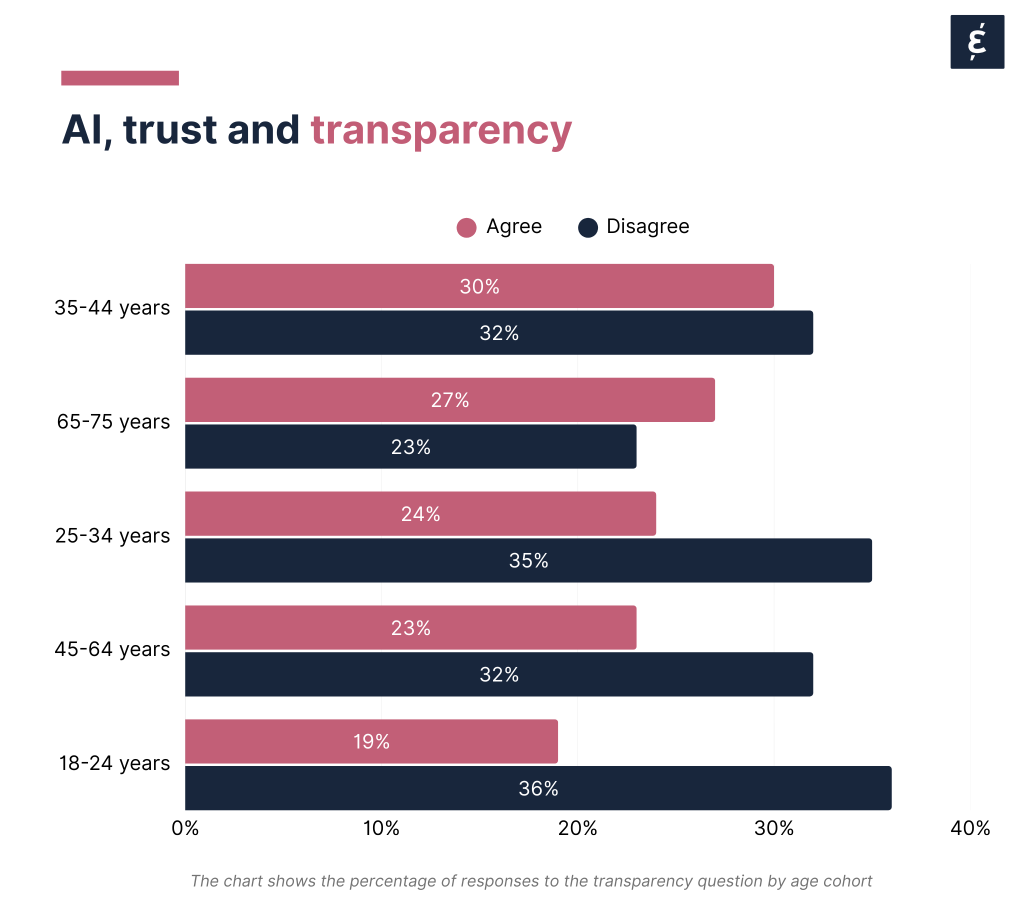

There’s a generational split here: Gen Z and younger millennials remain skeptical even when brands are open about AI use, while older consumers respond more favorably. Transparency may be table stakes, but the data suggests that disclosure alone is not enough: to build trust, it must be coupled with why and how your company uses AI, not just that you do.

What this means for communicators: Move from disclosure to dialogue. People want to see responsibility in action: governance structures, ethical guardrails, and leadership that admits trade-offs.

The most striking finding may be the simplest: most people are still neutral.

They haven’t decided whether to trust AI, its makers, or the brands that deploy it. That neutrality is not indifference. It’s an opening.

For companies, this is a rare chance to shape sentiment before it hardens. Leaders who articulate clear principles — why they use AI, what limits they set, and how they safeguard human judgment — will define the standards everyone else is measured against.

The data on AI trust is a signal, not a verdict. Companies that listen to and act upon that signal now will be better positioned to build enduring trust as technology and public sentiment evolve together.