The AI boom is well underway. Millions of people now use tools such as ChatGPT, Gemini, or Copilot every day. But what do they think of them? To find out, we ran a survey in the United States asking which generative AI tools people prefer, how often they use them, and what they think of the companies leading the AI revolution, including their views on trust and transparency in the sector.

Among the conclusions: more than 40% of people now use at least one AI tool at least several times a week, yet only one in five actually trusts the content those systems produce. Almost half remain “neutral” on the questions of AI’s factual accuracy and whether AI companies use technology responsibly. And only a quarter are more likely to trust a company when it’s transparent about how it uses AI.

This disconnect is shaping reputation in real time. It means that companies can’t rely on excitement about innovation to carry them through the next phase of AI adoption. They’ll need to earn belief, not just attention. And a “silent majority” of cautious but undecided users could ultimately shape AI’s social license to operate.

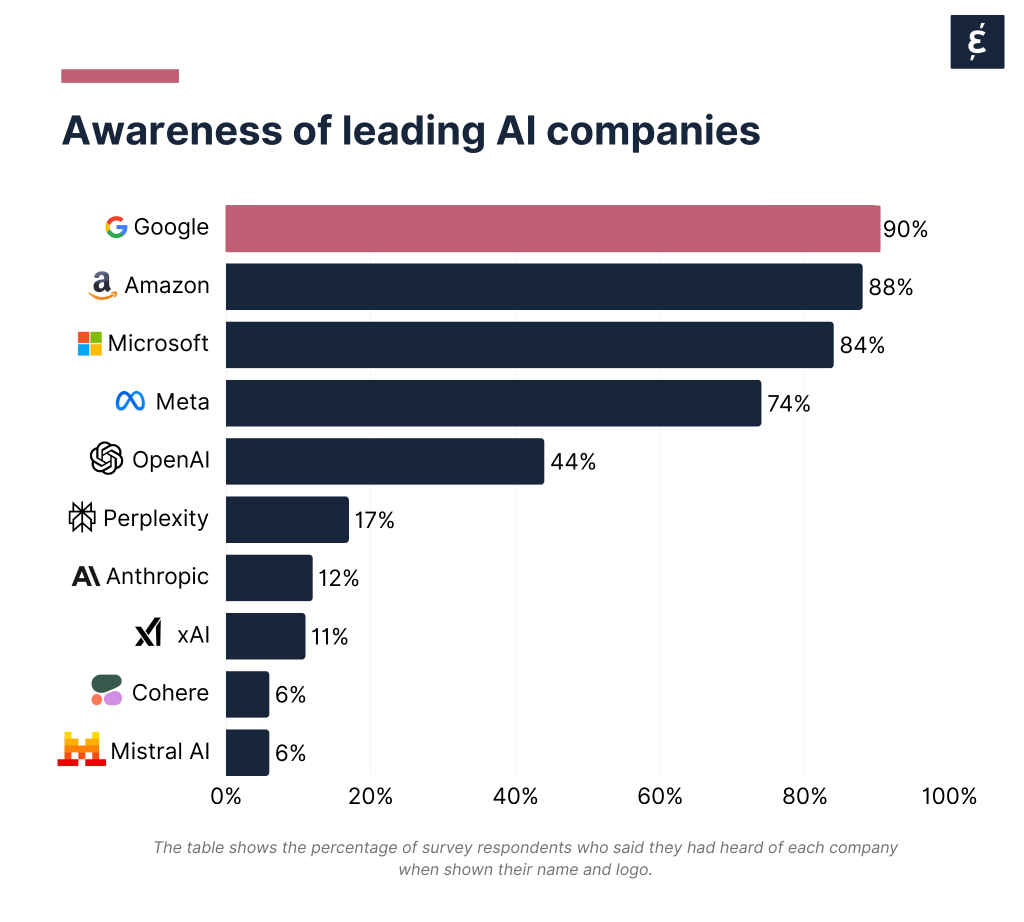

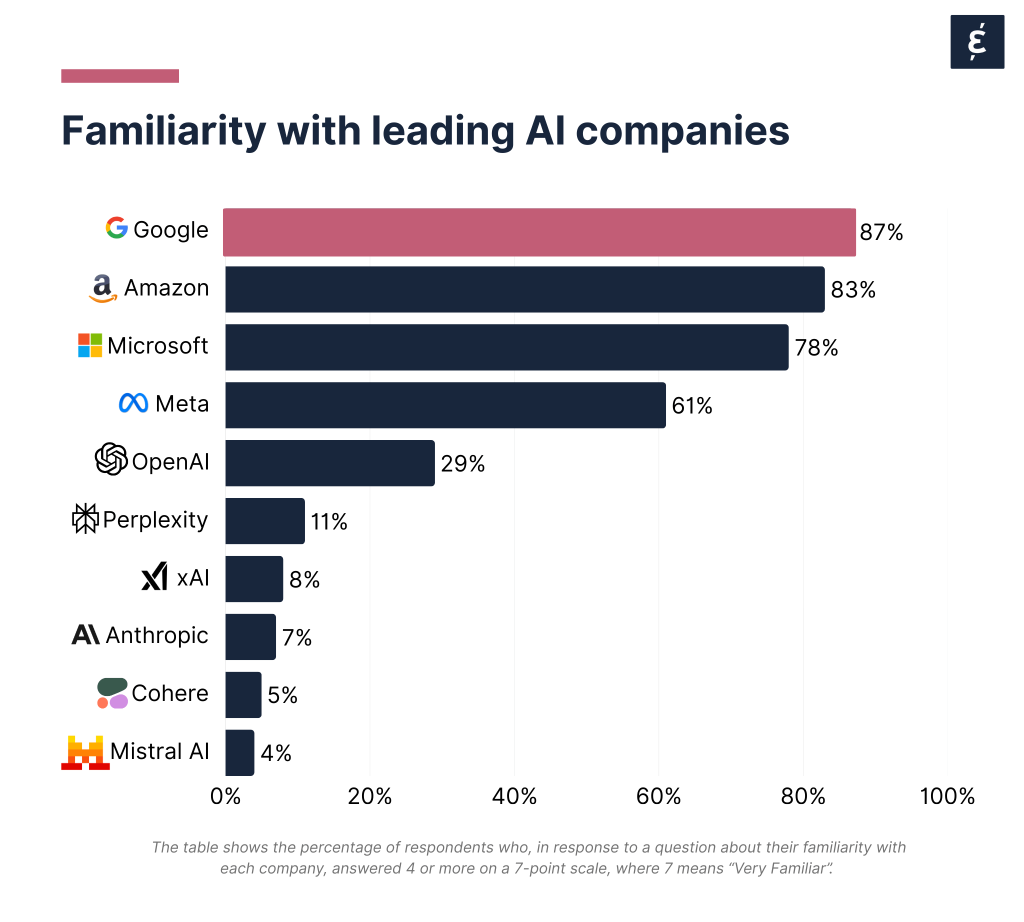

Our survey reveals a two-speed AI landscape. Big Tech (Google, Amazon, Microsoft, and Meta) dominates mindshare. More than eight in ten respondents have heard of Google, Amazon, and Microsoft, and three-quarters know of Meta. Beyond those four, awareness drops off steeply.

Familiarity follows a similar pattern: the giants of Silicon Valley remain well ahead of the pack, though a quarter of respondents are now familiar with ChatGPT developer OpenAI.

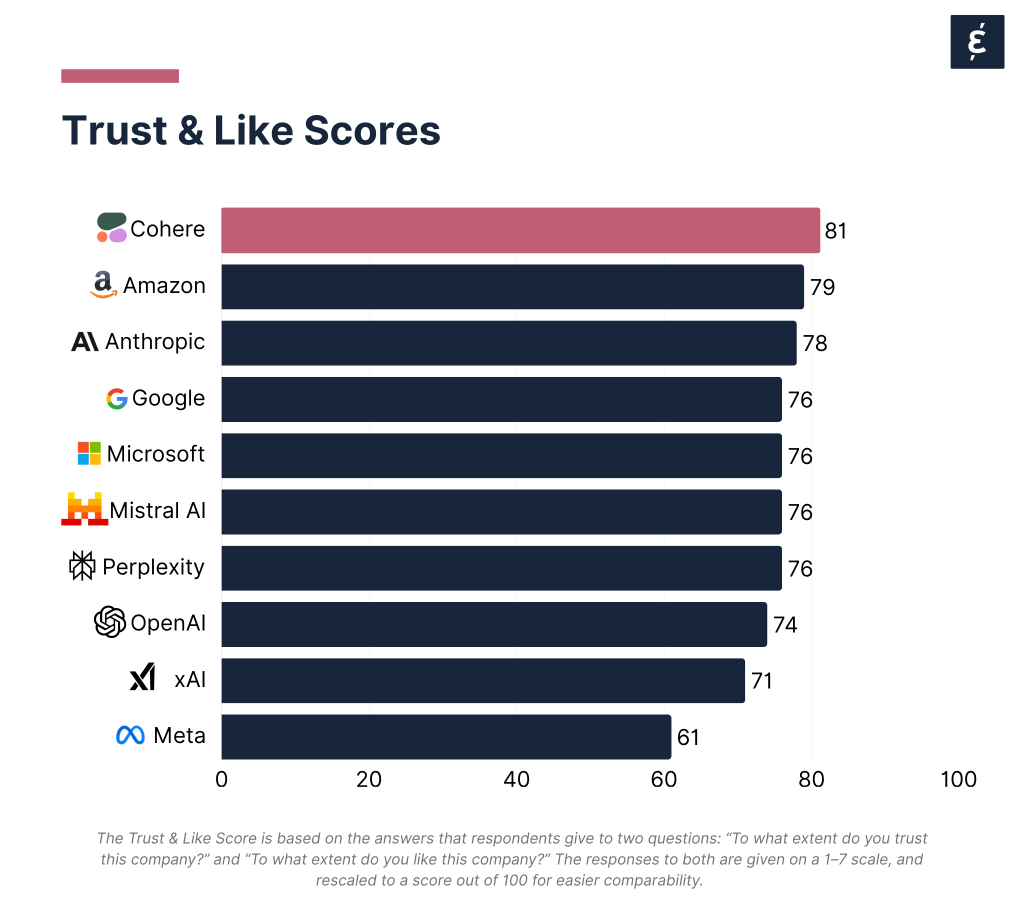

Yet visibility does not automatically translate into trust. While Microsoft, Google, and Amazon maintain relatively solid reputations, Meta has the lowest Trust & Like Score (61 out of 100) — showing that being widely known is not the same as being widely trusted.

Meanwhile, the opposite is true for smaller AI companies such as Anthropic, Cohere, Perplexity, and Mistral AI. They barely register in terms of awareness or familiarity, but among the niche audience who do know them, Trust & Like Scores are remarkably high. For example, Cohere tops the chart at 81. This suggests these companies are building credibility with AI-adjacent communities — developers, researchers, or professionals who actively engage with their tools — even if they remain less visible to the general public.

The takeaway: Big Tech may be more visible, but smaller players are more trusted by the people who know them.

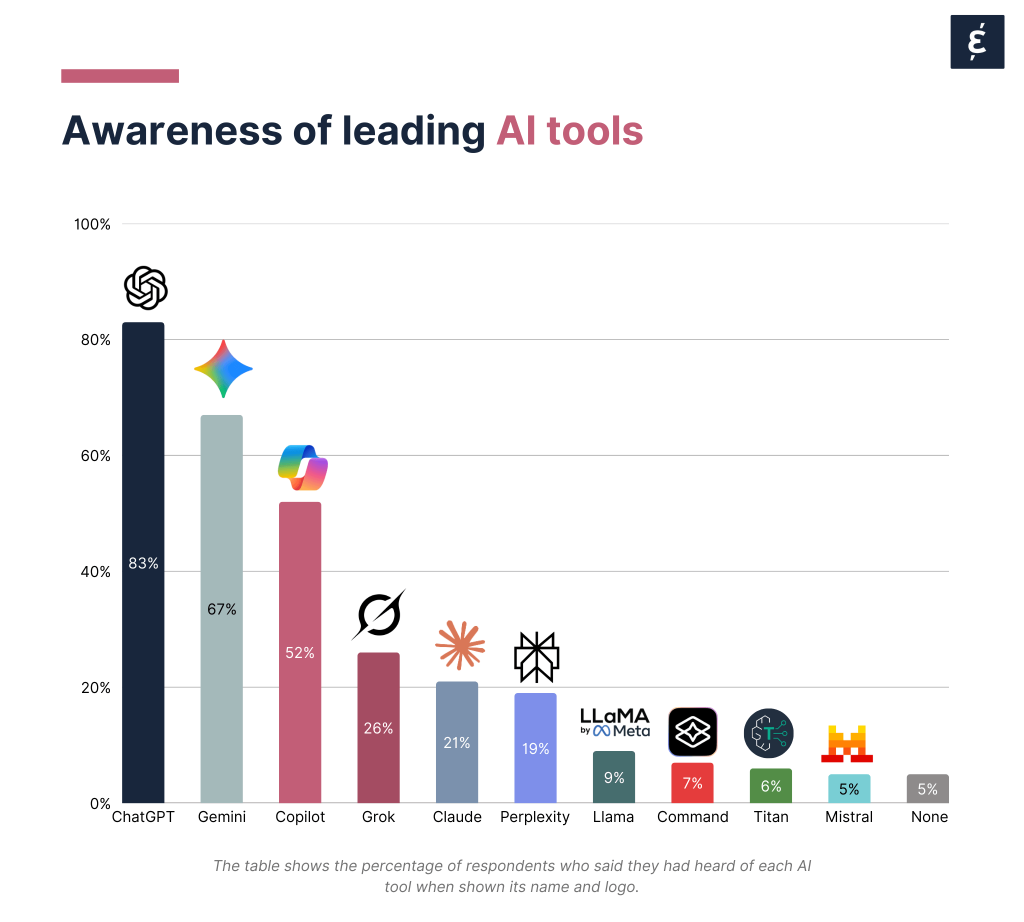

Generative AI tools themselves are what most people encounter directly, of course. Here, ChatGPT towers above the rest: 83% of respondents say they’ve heard of it, compared with 67% for Gemini and 52% for Copilot. Other tools — Grok (26%), Claude (21%), and Perplexity (19%) — register modestly, while Titan, Command, and Mistral are known by fewer than one in ten people.

Usage mirrors recognition.

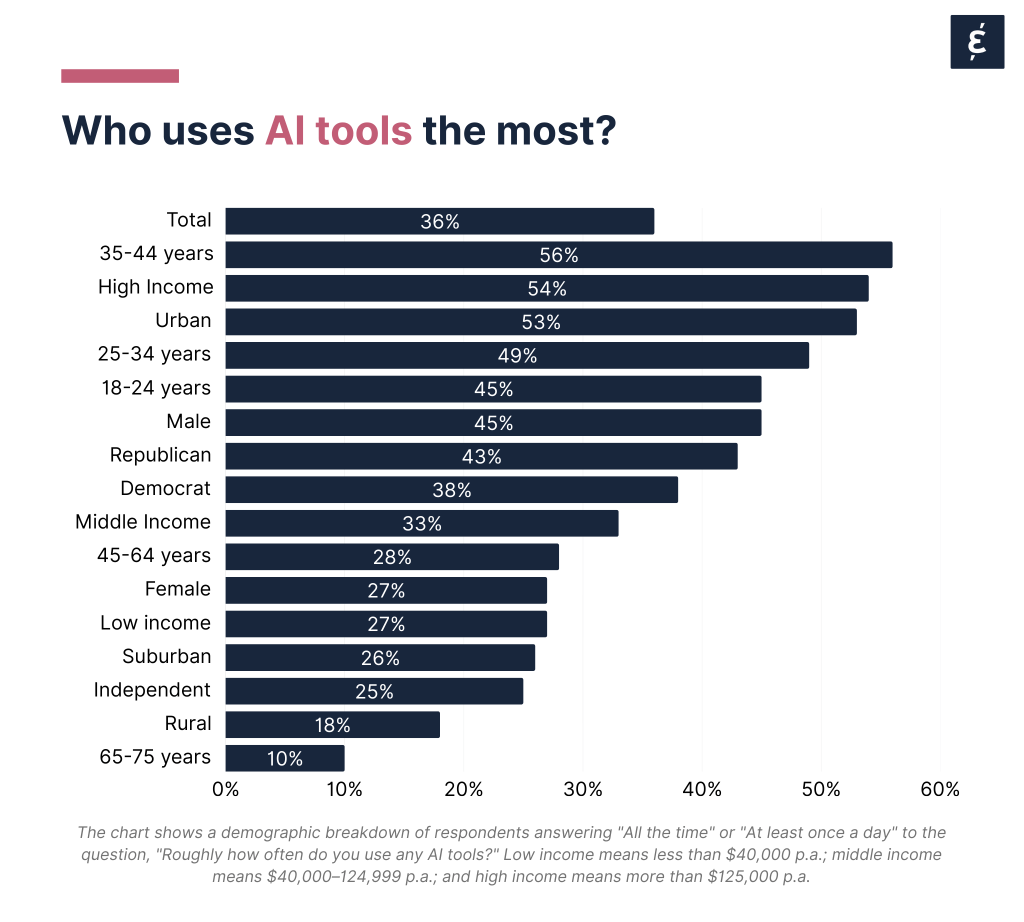

Usage is especially high among 35–44-year-olds, high earners, and urban residents, with more than half in these groups using AI daily. The youngest cohort (18–24) also leans heavily toward ChatGPT, while rural and low-income respondents lag significantly.

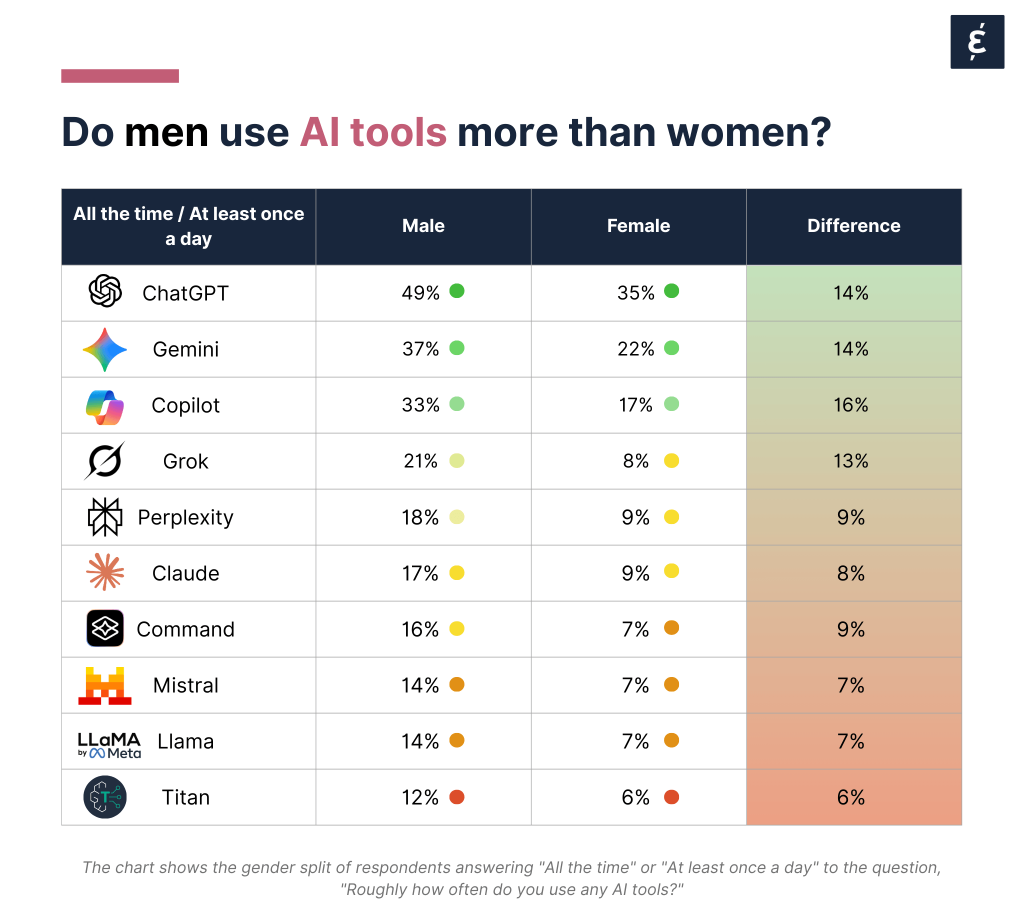

There are also sharp gender gaps: men are consistently heavier users than women across every tool. For instance, 49% of men say they often use ChatGPT, compared with 35% of women.

Despite high usage levels, distrust outweighs trust when it comes to AI companies and their products.

The rest? A strikingly high proportion — around 40–45% — answered Neutral. These “fence sitters” may not yet be convinced one way or another, making them pivotal for shaping future perceptions. At present, however, skeptics outnumber believers among those who have taken a position.

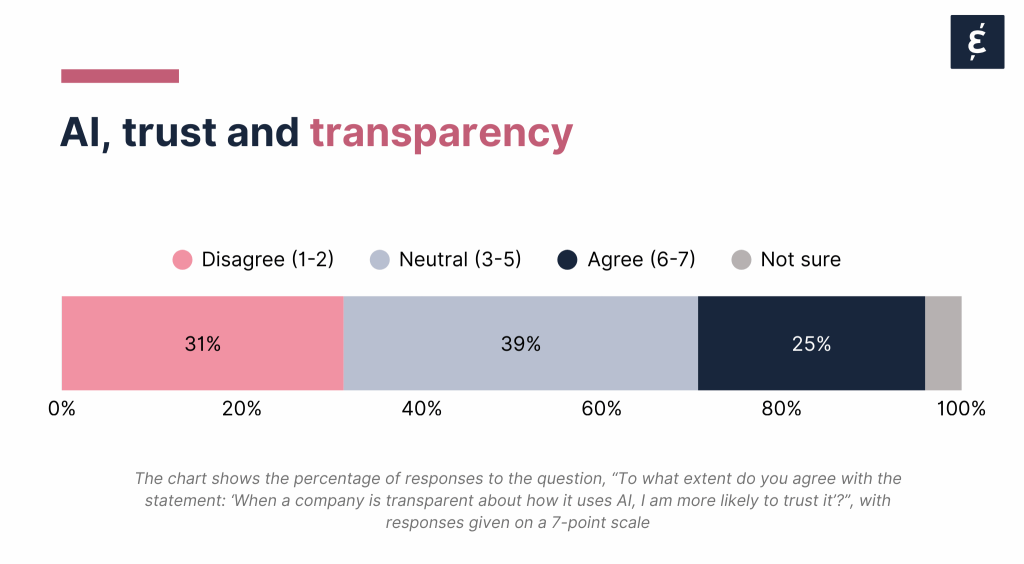

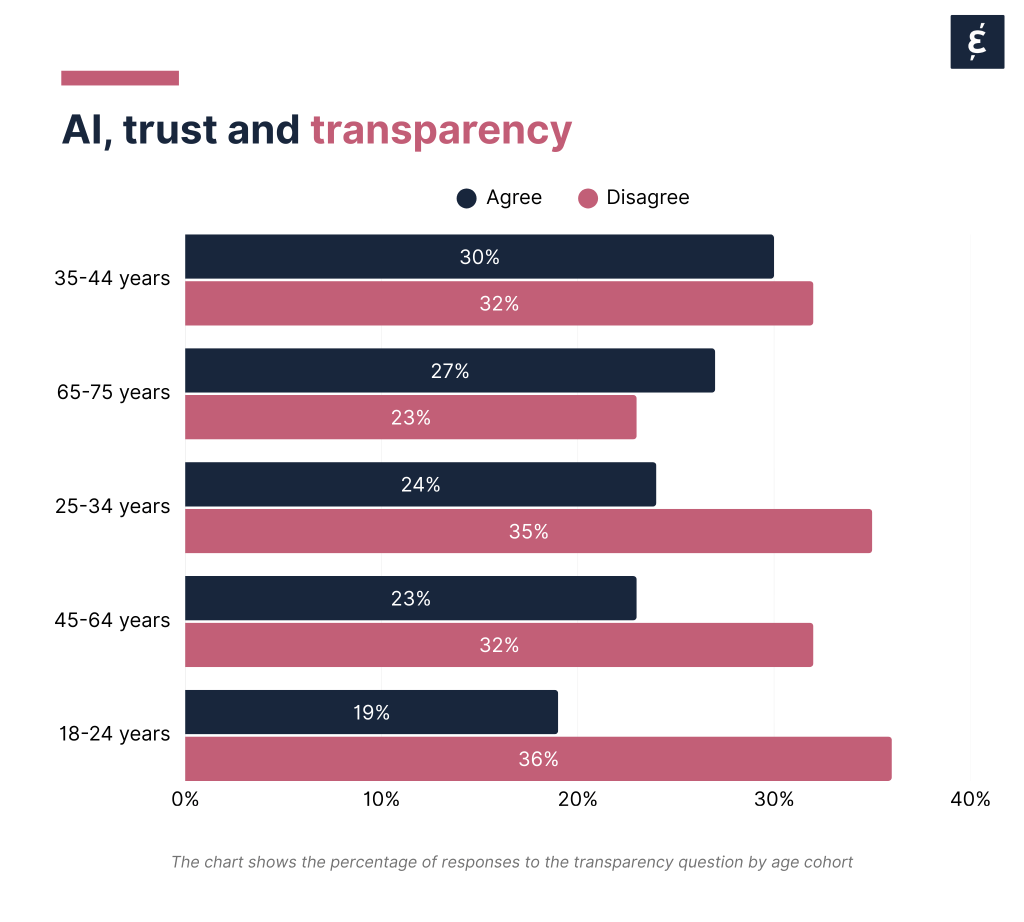

And what of companies that adopt AI? Asked if a company’s openness about how it uses AI would make them more trusting, 31% of respondents disagreed, while only 25% agreed, again with nearly four in ten (39%) staying neutral. This challenges the standard corporate mantra that “transparency builds trust.” It suggests that, for companies weaving AI tools into their workflows, transparency alone might be insufficient, because broader perceptions of AI affect how they themselves are perceived.

Why might transparency about AI use fail to resonate? Hard to say, though many may see it as PR spin, not substantive change; others may be uneasy with AI itself, regardless of explanation; and some may find disclosures too complex to reassure, or distrust corporations in general.

Demographically, opinions split sharply. High earners, Republicans, and urban residents appear in both the most positive and most negative groups, suggesting they are more polarized than other cohorts.

Compared to older cohorts, Gen-Z is notably less trusting of companies that use AI even when they’re transparent about it.

As with the use of AI tools, there are some gender gaps.

While women are as likely as men to say that “when a company is transparent about how it uses AI, I am more likely to trust it” (both 26%), they’re notably less likely to “trust AI companies to use their technology responsibly and in the public interest” (17% of women agree with this statement vs. 22% of men).

Women are also less likely to trust AI-generated content to be “factually accurate” (16% vs. 21%).

Our survey reveals a nuanced picture:

For brand, HR, and communications teams at companies that are increasingly adopting AI or weaving it into their core operations, the key takeaways are these:

1. AI is widespread, but trust lags: one-third distrust its accuracy, and almost half are neutral. That neutrality can shift either way, so companies must proactively shape their AI narrative now, emphasizing where human judgment still leads.

2. The gender gap could become a credibility gap. Men use AI tools far more than women, who are also less likely to trust AI companies to act responsibly. This demographic imbalance risks making AI seem built by and for a narrow group. Inclusion in AI (who benefits and who decides) is becoming a trust issue, not just a diversity concern.

3. Transparency helps, but it’s not enough. Being transparent about AI use doesn’t necessarily boost trust: only 25% say it makes them more trusting, while 31% say it does not. Younger generations remain skeptical despite openness. Companies must go beyond disclosure to explain why and how they use AI, showing governance and ethical safeguards.

4. The opportunity: define your values before the public does. With so many people remaining neutral on AI, companies can shape sentiment now by articulating clear principles: why they use AI, what limits they set, and how they protect human judgment. Acting early positions them to lead as standards form.

Caliber monitors how thousands of companies around the world are perceived on a daily basis through automated online interviews with real people across various stakeholder groups.

This study is based on interviews with 1,177 people in the US between 2 September and 14 September 2025.

The respondents are randomly selected, and the sample is representative of the national population in terms of gender, region, and age within the age span of 18 to 75.

The representative nature of the sample in this study is achieved solely by setting demographic quotas. There is no weighting of raw data or results.
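
For readers who want a rough sense of how precise the percentages in this study are, the sample size can be turned into an approximate margin of error. The sketch below is an illustration only and is not part of the study's methodology: it assumes simple random sampling and a 95% confidence level, whereas this study relies on demographic quotas, so the result should be read as a ballpark figure. The function name `margin_of_error` and the half-sample subgroup are hypothetical and chosen for this example.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error (as a proportion) for a reported share p.

    Assumes a simple random sample of size n. p = 0.5 is the most
    conservative choice, and z = 1.96 corresponds to roughly 95% confidence.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    return z * math.sqrt(p * (1.0 - p) / n)


SAMPLE_SIZE = 1177  # number of interviews reported for this study

# Full sample, worst case (p = 0.5).
full_sample_moe = margin_of_error(SAMPLE_SIZE)
print(f"Full sample: +/- {full_sample_moe * 100:.1f} percentage points")

# Hypothetical subgroup of about half the sample (for example, one gender).
# The study does not report subgroup sizes, so this size is an assumption.
half_sample_moe = margin_of_error(SAMPLE_SIZE // 2)
print(f"Half-sample subgroup: +/- {half_sample_moe * 100:.1f} percentage points")
```

Under these assumptions, figures for the full sample carry an uncertainty of roughly three percentage points, and figures for smaller subgroups carry more, which is worth keeping in mind when comparing narrow gaps between groups.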
